Universal Password Wipes Out All Safeguards

One buried password became the master key—exposing every account and leaving no trail

A single credential buried in source code became a universal backdoor, leaving no logs, no audit trail, and no way to know who was really accessing your users' accounts.

I had been advising a startup founder who was working primarily with an offshore team. During one of our discussions, he mentioned that the application had a hard-coded password.

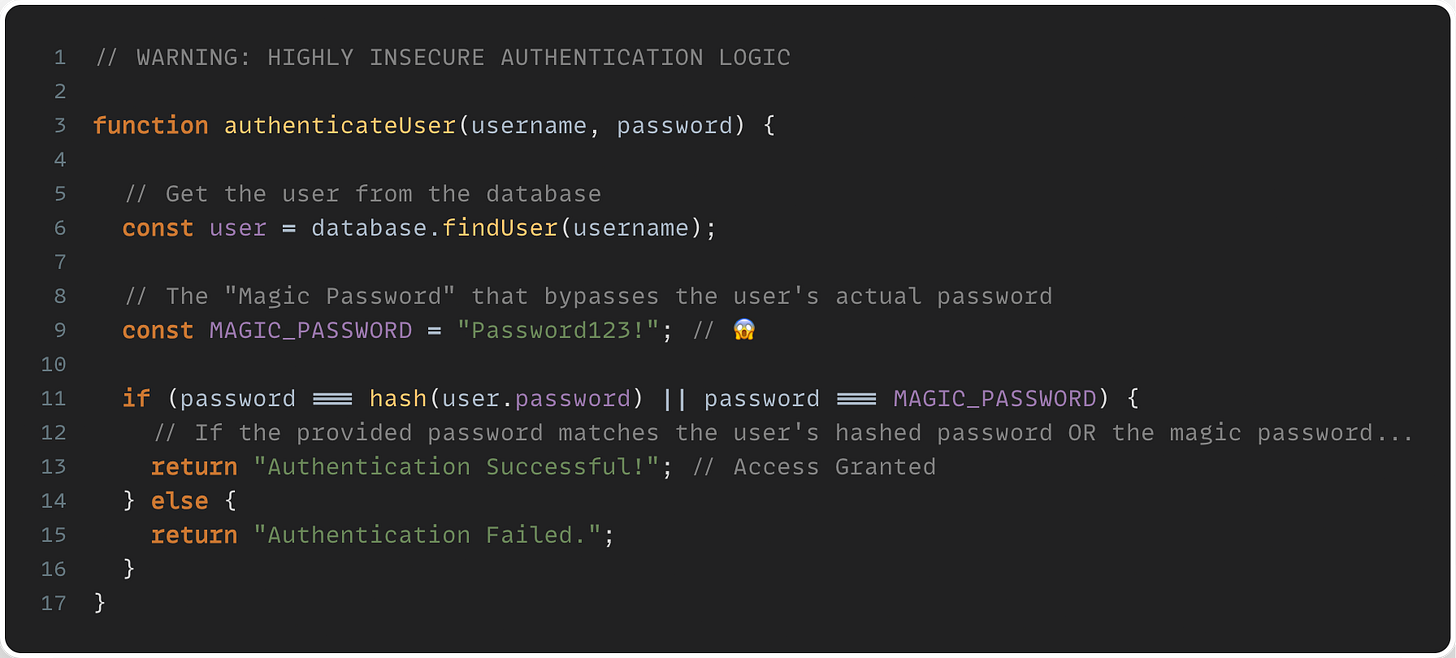

We should all know that hard-coded credentials are horrible, especially in your own application.

But it got worse. Was there a hard-coded password for just a single account? Nope. It was a special password that allowed access to any account in the system.

That’s when I got very concerned. This web-based, internet-accessible application had a password that would allow access to any account. It was embedded in the source code itself, and it was the same in all environments: local, staging, and production.

This means that any developer, or anyone else with access to the source code, has a magic password that allows access to any account, even the most privileged admin accounts. To make matters worse, it wasn't even a very good password.

As I looked further, I found that there was also no logging, and no indication of whether an account was being accessed by its rightful owner with the owner's password or by someone using the magic password.

There was no audit trail and no accountability.

Why?!?!

Once I determined the full scope of what was going on, the next question was: why does this functionality exist???

The short answer is that there wasn’t a great answer. The development team thought it might be useful to impersonate other users in order to investigate problems. While there are some valid use cases for account impersonation, this implementation was deeply flawed.

What Could Go Wrong?

Before we start making the situation better, let's go over some of the things that could go seriously wrong with the current implementation. Once we do that, we'll know some of the things we want to fix.

Developer Mistakes or Abuse

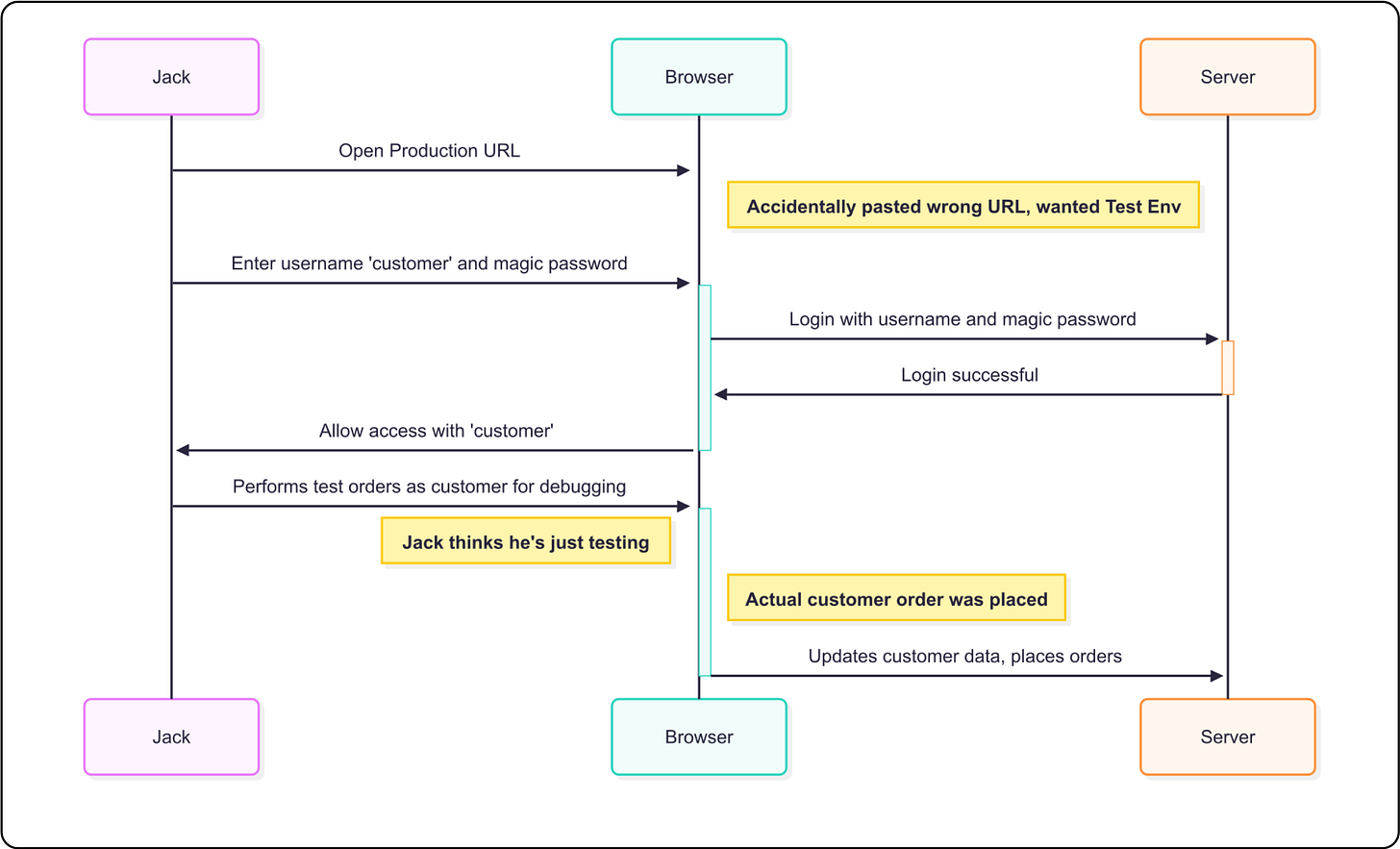

Let's take the most obvious thing that could go wrong: the development team making mistakes or misusing the magic password. Remember, since the password is hard-coded, it's the same in every environment. In this scenario, say a developer with completely good intentions wants to do some testing in a development environment, but the URL was mistakenly copied, or autocompleted, to the production environment without anyone noticing. The developer then performs actions on behalf of a real user without realizing it's a real, live production account.

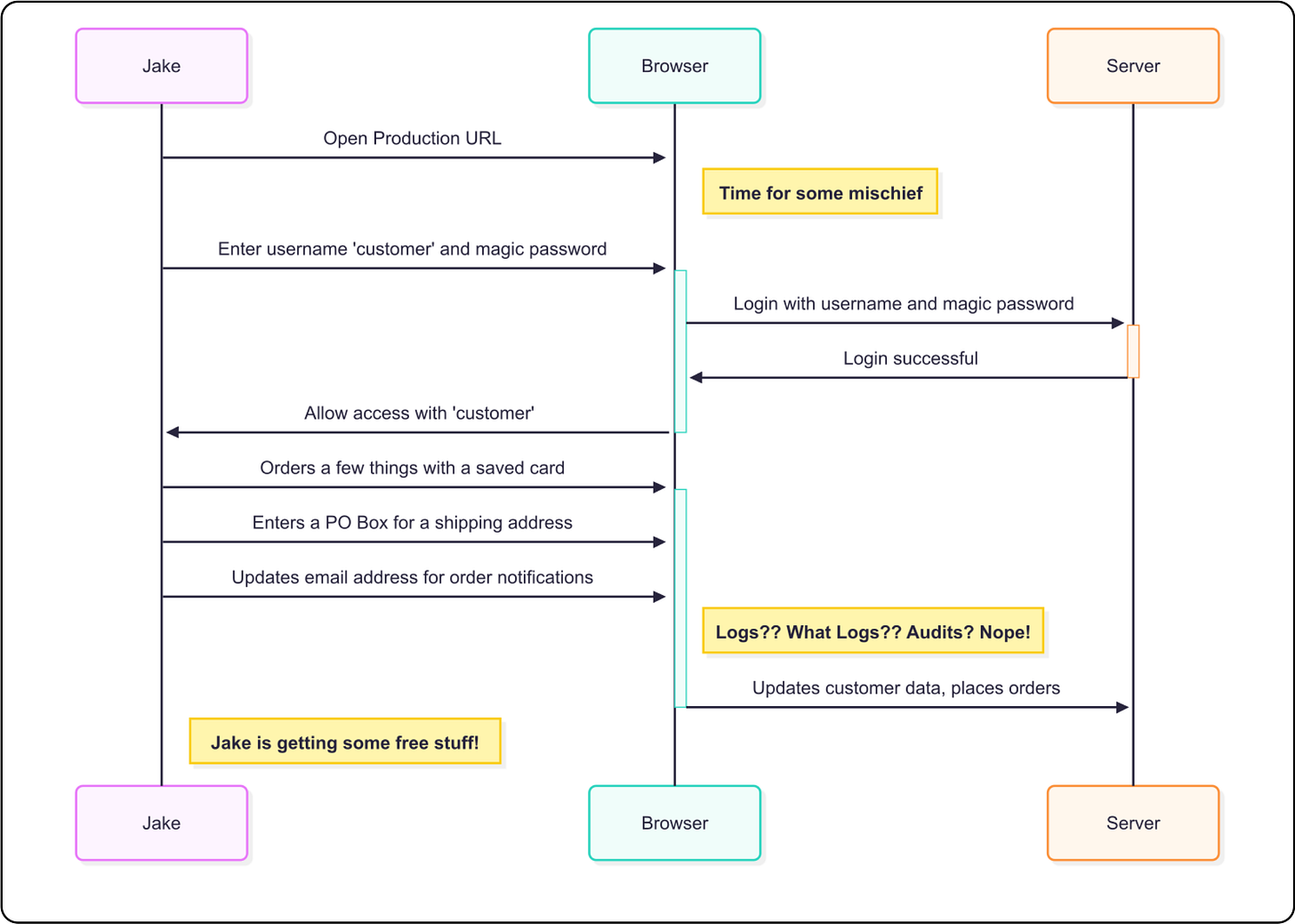

A scenario also exists where a malicious developer uses the magic password to act as a real production account. There are any number of ways to take advantage of this. The developer could make a purchase as the user and have it shipped to a different address, or re-route an existing order.

In both of these cases, there is no auditing, no logging, and no alarm going off to tell anyone that this is happening. Both scenarios are possible because every developer has access to the same magic password. There is no way to use a different password in each environment. Everyone has access to everything.

Accidental Secret Leaking

Another bad scenario can occur when using AI coding assistants. Code, including any secrets embedded in it, gets sent to a variety of LLMs. Depending on the provider and its data-retention policies, those secrets could make their way into data used to train models or respond to other requests, and could end up with people outside your organization. It’s also entirely possible for someone to leak source control access tokens, which would give people outside the organization access to the source code, and with it the magic password for every account.

There have been countless instances of accidentally leaked tokens, misconfigured repositories, and malicious third-party packages that leak or steal secrets and allow access to a private repository.

Cumbersome Secret Updating

Let's say a developer leaves the team. You'd probably want to change the magic password so that the developer can no longer access any of the accounts. Here is another downside of hard-coding the password: the only way to change it is to update the code and redeploy the application to every environment.

This also changes the password for all environments at once, so everyone has to switch to the new password. You’ll also have to make sure the new code reaches every environment quickly, because the old password keeps working anywhere the old code is still running.

Fixing User Impersonation

There is a very long list of features we’d want to add to our authentication and impersonation implementation.

Auditing

Logging

Alerting

Which users can impersonate

Separate credentials

MFA check each time

IP Whitelist or Region Whitelist

Short session timeouts

This is a pretty long list, and some of these features can take a bit of work to get right. We won’t try to tackle the implementation of all of them here, but...

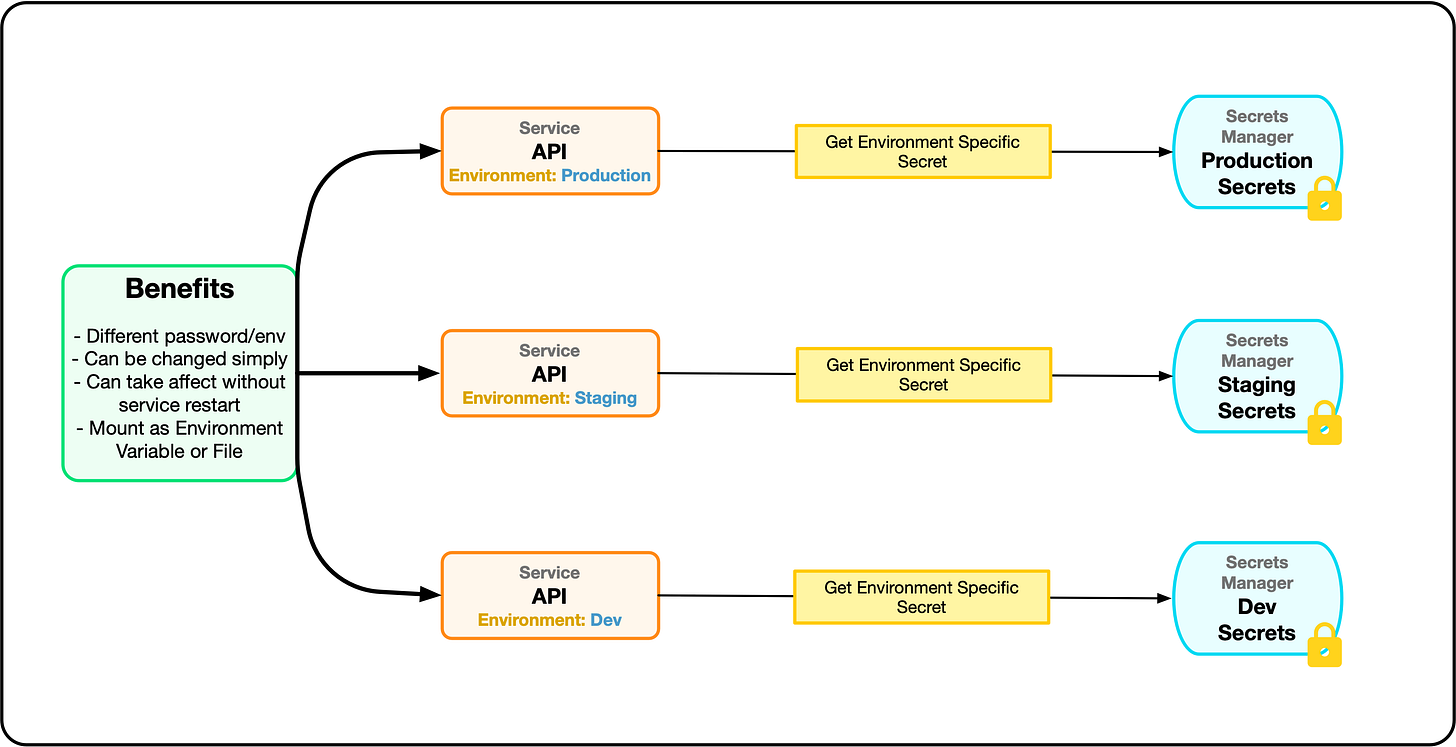

First Things First

If you have time to do nothing else in the short term, remove the hard-coded password. Move it into an environment variable or a file, and store it in and load it from a secrets manager so that it can differ per environment. This is still not a great solution, but it’s certainly better than a hard-coded password.

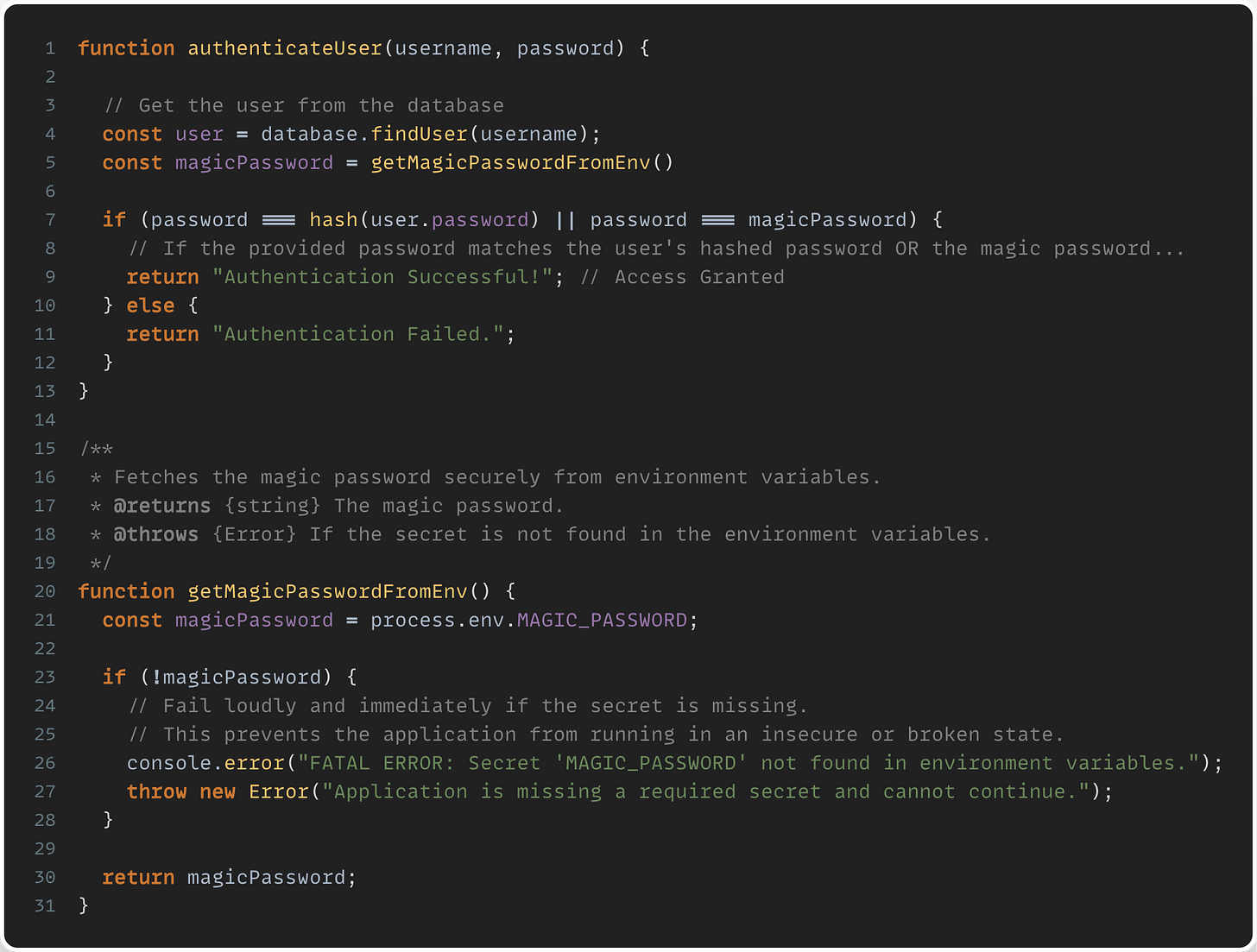

The code sample below demonstrates reading the secret from an environment variable. This is still the most common way to get secrets into an application. Most orchestration tools have ways to load or mount secrets into environment variables. As a fallback, when there is no mechanism for automatically loading secrets into environment variables, an init process can read the secrets from a secrets manager using an SDK and set the environment variables before the application starts.
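A minimal Python sketch of the reading side, under a few assumptions of our own: the variable name `IMPERSONATION_SECRET` and the helpers `load_required_secret` and `secret_matches` are hypothetical. It refuses to start when the variable is missing rather than falling back to a default, and it compares supplied values with `hmac.compare_digest` so the check doesn't leak timing information; that comparison is an extra precaution, not something the original setup described.

```python
import hmac
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is absent from the environment."""


def load_required_secret(name: str) -> str:
    """Read a secret from an environment variable, failing fast if it's missing.

    Refusing to start is safer than quietly falling back to a default
    (or, worse, hard-coded) value.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"required secret {name!r} is not set")
    return value


def secret_matches(candidate: str, expected: str) -> bool:
    """Compare a supplied value with the secret in constant time."""
    return hmac.compare_digest(candidate.encode("utf-8"), expected.encode("utf-8"))
```

At startup you would call something like `secret = load_required_secret("IMPERSONATION_SECRET")` once, and keep the value out of logs and error messages.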

Although this is still the most common approach, it does have downsides. Supporting lots of secrets as environment variables can be cumbersome. And when secrets change, the application usually has to be restarted to pick up the new values. The exception is when the application itself reads from a secrets manager and sets its own environment variables; it can then re-read the secrets and update the environment while it's running.
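That exception, re-reading secrets while the application runs, might look roughly like the sketch below. The `fetch` callable is hypothetical: it stands in for a thin wrapper you would write around your secrets manager's SDK and simply returns a mapping of variable names to values. The initial load happens before the function returns, and a daemon thread refreshes on a fixed interval, keeping the previous values if a refresh fails.

```python
import logging
import os
import threading
from typing import Callable, Dict

logger = logging.getLogger(__name__)

# Hypothetical: any callable that returns {ENV_VAR_NAME: value}, for example a
# thin wrapper you write around your secrets manager's SDK.
SecretFetcher = Callable[[], Dict[str, str]]


def refresh_environment(fetch: SecretFetcher) -> None:
    """Fetch the latest secrets and write them into os.environ."""
    for name, value in fetch().items():
        os.environ[name] = value


def start_refresher(fetch: SecretFetcher, interval_seconds: float) -> threading.Event:
    """Load secrets once, then re-read them every interval_seconds.

    Returns an Event; call .set() on it to stop the background thread.
    """
    stop = threading.Event()
    refresh_environment(fetch)  # initial load, before the app starts serving

    def loop() -> None:
        # Event.wait() returns True once stop is set, which ends the loop.
        while not stop.wait(interval_seconds):
            try:
                refresh_environment(fetch)
            except Exception:
                # Keep the previous values if the secrets manager is unreachable.
                logger.exception("secret refresh failed; keeping previous values")

    threading.Thread(target=loop, daemon=True, name="secret-refresher").start()
    return stop
```

This only helps if the rest of the code reads `os.environ` at the moment it needs a value; anything copied into a variable at startup won't see the update.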

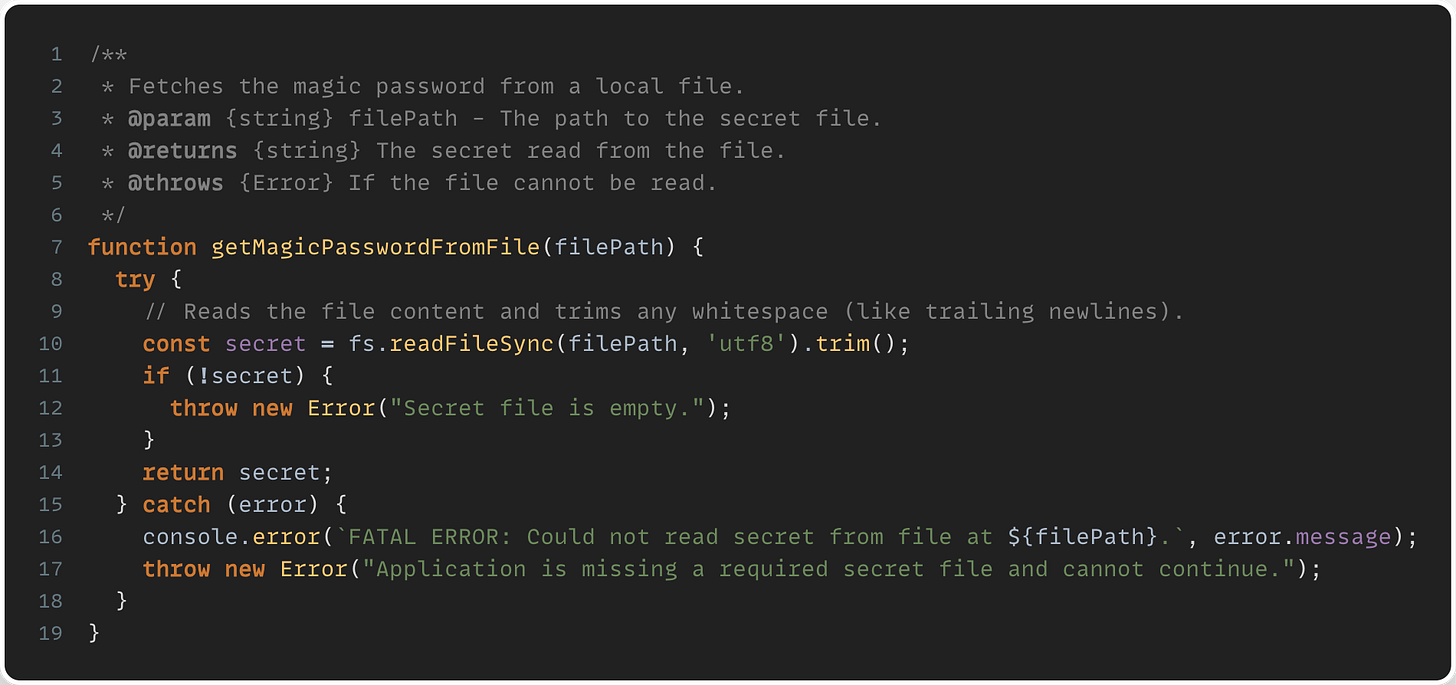

A better alternative is to load or mount secrets into a file that can only be read by the application process. The code sample below demonstrates this. Using a file has a few benefits. Multiple related values can be stored in the same secret, which simplifies secrets management. It’s also possible to watch the file and reload it when the secrets change, without restarting the service.
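A rough Python sketch of the file-based approach, assuming the secret is mounted as a JSON document (so several related values live together) and that file permissions already restrict it to the application's user. The class name `FileSecret` is hypothetical, and change detection here is simple polling of the file's modification time and size on each read; a file-watching library could replace that.

```python
import json
import os
import threading
from typing import Any, Dict, Optional, Tuple


class FileSecret:
    """Reads a JSON secret file and reloads it whenever the file changes.

    Assumes the file holds a JSON object readable only by the app's user.
    Change detection is (mtime, size) polling on each read.
    """

    def __init__(self, path: str) -> None:
        self._path = path
        self._lock = threading.Lock()
        self._signature: Optional[Tuple[int, int]] = None
        self._values: Dict[str, Any] = {}
        self._reload_if_changed()

    def _reload_if_changed(self) -> None:
        st = os.stat(self._path)  # follows symlinks to the real file
        signature = (st.st_mtime_ns, st.st_size)
        with self._lock:
            if signature == self._signature:
                return
            with open(self._path, encoding="utf-8") as f:
                # If parsing fails (e.g. a half-written file), the old values
                # stay in place and the next read tries again.
                self._values = json.load(f)
            self._signature = signature

    def get(self, key: str) -> Any:
        """Return one value, picking up any change to the file first."""
        self._reload_if_changed()
        with self._lock:
            return self._values[key]
```

Calling `os.stat` on every read is cheap for a sketch, but a busy service might check on a timer instead.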

Whichever approach you choose, it’s better than a hard-coded password. It's still a flawed method, though: anyone who holds the shared secret can still access any account, and nothing records who used it.

Switching to User-based Impersonation Flows

To better secure user impersonation, you’ll want to remove the magic password altogether. Instead, put mechanisms in place that let users log in with their own passwords and then transition to impersonating an allowed set of users.

Allowing individual users to impersonate other users is a bit more work than the previous method, but it has a lot of benefits. This model also has the most variation, so I won’t be able to go over every detail here.

You’ll want to start with a setting that determines whether a user is allowed to impersonate other users. As part of this setting, you’ll also want to include attributes of the users that may be impersonated, such as a maximum role and an organization. There are likely other attributes specific to your application that will also matter.
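A minimal sketch of such a setting and the check that uses it. Everything here is hypothetical: the `Role` hierarchy, the `User` fields, and the `ImpersonationPolicy` shape are stand-ins for whatever your application actually models. The policy defaults to disabled, so a user can impersonate no one unless explicitly granted.

```python
from dataclasses import dataclass, field
from enum import IntEnum
from typing import FrozenSet


class Role(IntEnum):
    # Hypothetical role hierarchy; higher values are more privileged.
    MEMBER = 1
    MANAGER = 2
    ADMIN = 3


@dataclass(frozen=True)
class User:
    id: str
    role: Role
    organization: str


@dataclass(frozen=True)
class ImpersonationPolicy:
    """Per-user setting: may this user impersonate others, and whom?"""
    enabled: bool = False                      # deny by default
    max_target_role: Role = Role.MEMBER        # ceiling on whom they can become
    allowed_organizations: FrozenSet[str] = field(default_factory=frozenset)


def can_impersonate(actor: User, policy: ImpersonationPolicy, target: User) -> bool:
    """Return True only if every attribute of the target passes the policy."""
    if not policy.enabled:
        return False
    if actor.id == target.id:
        return False  # impersonating yourself serves no purpose
    if target.role > policy.max_target_role:
        return False  # never reach above the configured role ceiling
    return target.organization in policy.allowed_organizations
```

For example, a support manager whose policy allows `Role.MEMBER` in `"acme"` can impersonate a regular Acme user, but not an Acme admin or a user in another organization.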

If your application supports MFA, you may want to require an explicit MFA check before allowing the user to start an impersonation session. The safest MFA checks in this context are passkey-based methods or hardware security keys. You might also want to limit how long an impersonation session can last. Depending on how you represent authentication state, this could be an expiration stored in a cookie or the expiration of a JWT.
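The sketch below combines both ideas: a session can only start if MFA was verified very recently, and the resulting token carries a short expiration. It is a JWT-shaped but deliberately simplified token built only on the standard library (no header, HMAC-SHA256 only); in production you would more likely use a maintained JWT library or your framework's signed sessions. The 15-minute and 2-minute limits, the function names, and `mfa_verified_at` (a timestamp your MFA flow would record) are assumptions. The `act` claim follows the actor claim from RFC 8693, so the real person behind the session is never lost.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Any, Dict, Optional

# Assumed limits for this sketch; tune them for your application.
SESSION_TTL_SECONDS = 15 * 60   # how long an impersonation session lasts
MFA_MAX_AGE_SECONDS = 2 * 60    # how recent the MFA check must be


def _sign(key: bytes, payload: str) -> str:
    digest = hmac.new(key, payload.encode("utf-8"), hashlib.sha256).digest()
    return base64.urlsafe_b64encode(digest).decode("ascii")


def start_impersonation(key: bytes, actor_id: str, target_id: str,
                        mfa_verified_at: float, now: Optional[float] = None) -> str:
    """Issue a short-lived, signed impersonation token after a fresh MFA check.

    'sub' is the impersonated user, 'act' names the real actor, 'exp' is expiry.
    """
    now = time.time() if now is None else now
    if now - mfa_verified_at > MFA_MAX_AGE_SECONDS:
        raise PermissionError("a fresh MFA check is required to impersonate")
    claims = {"sub": target_id, "act": {"sub": actor_id},
              "iat": int(now), "exp": int(now) + SESSION_TTL_SECONDS}
    payload = base64.urlsafe_b64encode(
        json.dumps(claims, sort_keys=True).encode("utf-8")).decode("ascii")
    return f"{payload}.{_sign(key, payload)}"


def verify_impersonation(key: bytes, token: str,
                         now: Optional[float] = None) -> Dict[str, Any]:
    """Return the claims if the token is authentic and not yet expired."""
    now = time.time() if now is None else now
    payload, _, signature = token.partition(".")
    # Constant-time comparison of the supplied and expected signatures.
    if not hmac.compare_digest(signature.encode("utf-8"),
                               _sign(key, payload).encode("utf-8")):
        raise PermissionError("invalid impersonation token")
    claims = json.loads(base64.urlsafe_b64decode(payload))
    if now >= claims["exp"]:
        raise PermissionError("impersonation session has expired")
    return claims
```

The signing key should come from a secrets manager, as discussed above, and should never be the same value as any user-facing password.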

Auditing, Logging, and Alerting

We need to make sure we can track when an impersonation occurs. A log entry should be generated when an impersonation session begins. Each action taken during the session should also be logged, along with the fact that it’s being done within an impersonation session and the identity of the user doing the impersonating.
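One way to make those records consistent is a single helper that emits structured JSON through a dedicated logger, so every entry carries both the real actor and the impersonated account. The logger name `audit.impersonation`, the event names, and the field names below are illustrative choices, not a standard.

```python
import json
import logging
import time
from typing import Any, Optional

# A dedicated logger so audit records can be routed to their own destination.
audit_logger = logging.getLogger("audit.impersonation")
audit_logger.setLevel(logging.INFO)


def audit(event: str, actor_id: str, target_id: str,
          action: Optional[str] = None, **details: Any) -> None:
    """Emit one structured (JSON) audit record for impersonation activity."""
    record = {
        "ts": time.time(),
        "event": event,              # e.g. "impersonation.start"
        "impersonation": True,       # flags every record from a session
        "actor_id": actor_id,        # the person actually performing the action
        "target_id": target_id,      # the account being acted on
        "action": action,            # e.g. "order.update"; None for session events
        "details": details,          # extra, non-secret context
    }
    audit_logger.info(json.dumps(record, sort_keys=True, default=str))
```

A session start would then be `audit("impersonation.start", actor.id, target.id)`, and each request made under that session would call `audit("impersonation.action", ..., action="order.update", order_id=...)`.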

Depending on the sensitivity of the application, it might also be worth having an alerts channel (email, Slack, or a notification stream) that surfaces the start of each impersonation session for general visibility.
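If audit records are already logged as JSON, a small `logging.Handler` can forward just the session-start events to that channel. This sketch assumes records with hypothetical `event`, `actor_id`, and `target_id` fields, and a `send` function you would write around your email, Slack, or notification client; it deliberately swallows delivery failures so alerting can't break the request being audited.

```python
import json
import logging
from typing import Callable

# Hypothetical sink: wrap your email, Slack, or notification-stream client
# in a function that accepts a message string.
AlertSink = Callable[[str], None]


class ImpersonationAlertHandler(logging.Handler):
    """Forwards impersonation-start audit records to an alerts channel."""

    def __init__(self, send: AlertSink) -> None:
        super().__init__(level=logging.INFO)
        self._send = send

    def emit(self, record: logging.LogRecord) -> None:
        try:
            event = json.loads(record.getMessage())
        except ValueError:
            return  # not a JSON audit record; ignore it
        if not isinstance(event, dict) or event.get("event") != "impersonation.start":
            return
        try:
            self._send(f"{event['actor_id']} started impersonating {event['target_id']}")
        except Exception:
            # Report through logging's error hook instead of breaking the caller.
            self.handleError(record)
```

Sending synchronously inside a log call can slow requests down; putting a `logging.handlers.QueueHandler` in front of it is a reasonable next step.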

One of the best ways to help ensure that there aren’t any loopholes and that no one is trying to snoop around for ways to circumvent authentication or authorization is to have good auditing, observability, and alerting.

This section applies to either impersonation model: the per-environment secret or user-based impersonation. You’ll likely have more detail available to you with the user-based model.

Next Steps

There’s been a lot here. Hopefully this helps demonstrate some of the issues that can occur with user impersonation schemes. There are, however, a few things to keep in mind:

This does not cover every scenario

There are a lot of good options that don’t require you to implement the solution yourself

Use an existing identity provider (IdP) if possible

That last point is important. In most cases, user management and impersonation are not part of your application's core domain. There are a lot of small, important details to keep track of in user management, authentication, authorization, and impersonation, and it can take a lot of time and effort to build a secure system. It may well be worth looking into third-party options before embarking on building your own.

If you are going to build your own, make sure there is good logging and alerting. Auditing logins and sensitive operations is just as important. Without visibility, it’s very hard to ensure that your authentication system isn't being abused.